Deep Learning Framework for Human-Computer Interaction Based on Nvidia Jetson Nano

April 18, 2022

Abstract

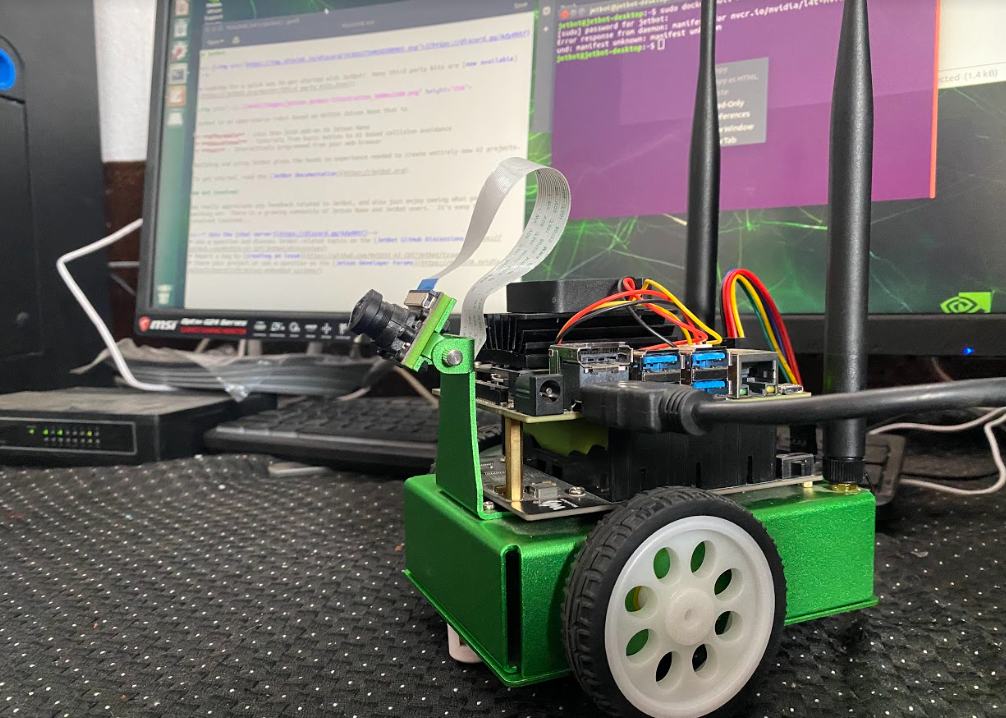

Computer vision systems attempt to replicate the successful use of hand gestures in human communication. Human-computer interaction (HCI) has advanced, although not as quickly as other technological fields, and manual, controller-based, or device-based methods of communication are still frequently employed. Systems that can recognize different hand gestures could substantially improve the connection between people and computers. This project aims to simplify and enhance gesture-based machine control. The hardware portion of the project is an Nvidia Jetson Nano-based AI bot operated by hand gestures. The project's image processing algorithm uses a convolutional neural network (CNN), a machine learning approach designed specifically for image processing, to identify the hand gesture. A Flask server is set up and configured on the bot, which also carries an on-board camera. A web page hosted on the Flask server reads real-time gestures from the webcam and sends the captured frames to the server. The homepage also shows the feed from the bot's camera, which helps the user navigate the bot while it is out of sight. A machine learning model configured on the server identifies the gestures captured by the webcam and outputs a command. The bot then acknowledges the command and executes it by navigating as directed. In this project, we introduce a hand-gesture-based control interface for navigating the Jetson Nano bot.

Introduction

With graphics processing units (GPUs), deep learning-based object detection can run inference efficiently. However, processing capabilities are constrained when generic deep learning frameworks are deployed on embedded systems and mobile devices [1]. Gesture recognition is crucial for human-computer interaction [2]. Gesture recognition is the process of using mathematical algorithms to interpret human gestures. Applications such as sign language translation make use of real-time gesture recognition. Many hand gesture recognition technologies are available today; however, they recognize only fixed gestures. Deep learning has been established as one of the leading approaches for computer vision, and many techniques have been developed to improve on conventional deep learning algorithms. Deep learning is a branch of machine learning that builds on deep neural networks, a concept loosely analogous to the human brain [3]. Its main application areas are image classification, object detection, natural language processing, and voice/speech recognition. Deep learning techniques can be categorized into convolutional neural networks, autoencoders, and sparse and restricted Boltzmann machines. The major benefits of convolutional layers are that local connectivity captures the relationships between neighboring pixels and that weight sharing lowers the number of parameters. Because the same filter is applied across the whole image, the response to an object is also largely independent of where the object appears in the picture.
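To make the parameter saving from weight sharing concrete, the following minimal sketch counts the trainable parameters of a convolutional layer and of a fully connected layer applied to the same input. The input size (64x64 RGB), the 3x3 kernel, and the 16 output channels or units are illustrative assumptions, not values taken from this project, and the helper names are hypothetical.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights of one shared filter per output channel, plus one bias each.

    The count does not depend on the image size, because the same
    filter is reused at every spatial position (weight sharing).
    """
    return (kernel_h * kernel_w * in_channels + 1) * out_channels


def dense_params(in_h, in_w, in_channels, out_units):
    """Every input value connects to every output unit, plus one bias each."""
    return (in_h * in_w * in_channels + 1) * out_units


# Assumed example: 64x64 RGB frame, 3x3 kernels, 16 feature maps / units.
conv = conv2d_params(3, 3, 3, 16)
dense = dense_params(64, 64, 3, 16)
print(conv)   # 448
print(dense)  # 196624
```

Under these assumptions the convolutional layer needs 448 parameters against 196,624 for the fully connected layer, and the gap widens as the input resolution grows.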

Although several gesture-based control systems have been developed, they are cumbersome to operate and support only fixed gestures. This project includes a feature that lets users control the bot with their own custom gestures, so that the HCI is open and usable by most users. Training the machine learning models requires code that lets users provide their own training data. Real-time communication between the Jetson Nano bot and the web page required a bridge between them. A Flask server hosted on the Jetson Nano-based AI bot was created specifically for this purpose.
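One way the custom-gesture idea could be organized is sketched below: a small registry that binds user-defined gesture labels to bot commands, collects training sample paths per label, and converts classifier output into a command. This is only an illustration under stated assumptions. The class `GestureRegistry`, the gesture names, the command strings, the file path, and the 0.8 confidence threshold are all hypothetical and are not taken from the project's code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence


@dataclass
class GestureRegistry:
    """Hypothetical store for user-defined gestures and their bot commands."""
    commands: Dict[str, str] = field(default_factory=dict)       # label -> command
    samples: Dict[str, List[str]] = field(default_factory=dict)  # label -> image paths

    def register(self, label: str, command: str) -> None:
        """Bind a custom gesture label to a bot command."""
        self.commands[label] = command
        self.samples.setdefault(label, [])

    def add_sample(self, label: str, image_path: str) -> None:
        """Record one user-provided training image for a registered gesture."""
        if label not in self.commands:
            raise KeyError(f"unknown gesture: {label}")
        self.samples[label].append(image_path)

    def labels(self) -> List[str]:
        # Sorted so the class index used for training stays stable across runs.
        return sorted(self.commands)

    def decode(self, probabilities: Sequence[float],
               threshold: float = 0.8) -> Optional[str]:
        """Map classifier probabilities to a command, or None if unsure."""
        labels = self.labels()
        if len(probabilities) != len(labels):
            raise ValueError("expected one probability per registered gesture")
        if not labels:
            return None
        best = max(range(len(labels)), key=lambda i: probabilities[i])
        if probabilities[best] < threshold:
            return None  # assumed policy: ignore low-confidence predictions
        return self.commands[labels[best]]


registry = GestureRegistry()
registry.register("open_palm", "stop")
registry.register("fist", "forward")
registry.add_sample("fist", "data/fist/0001.jpg")

# labels() returns ["fist", "open_palm"], so index 0 corresponds to "fist".
print(registry.decode([0.93, 0.07]))  # forward
print(registry.decode([0.55, 0.45]))  # None
```

Returning no command for low-confidence predictions is a design choice assumed here so that an ambiguous frame does not move the bot; the server could equally repeat the last command or send an explicit stop.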

The objectives of our project are to provide a framework that enables users to create their own custom gestures for machine control and to operate the Jetson Nano-based AI bot with hand gestures. This study aims to develop a real-time gesture classification system that can quickly recognize gestures in environments with natural sunlight. This technique could make it simpler to control automobiles and unmanned aerial vehicles. One of the main implementation objectives is to help persons with disabilities use their existing tools more easily by integrating this technology into those tools. The project can also be used by universities as a teaching toolkit.

Conclusion

A real-time video feed is made available online, the CNN model classifies the hand gestures in each frame, and the Jetson Nano bot then navigates according to those gestures. Although we currently use the Jetson Nano as our hardware, the primary goal of our project is to create a more effective communication system for human-machine interaction.

On the downside, our system does not function in unstable environments, cannot recognize gestures at distances greater than 3 meters, and does not perform well in poor lighting conditions.